AcuLa: Language Models as Semantic Teachers

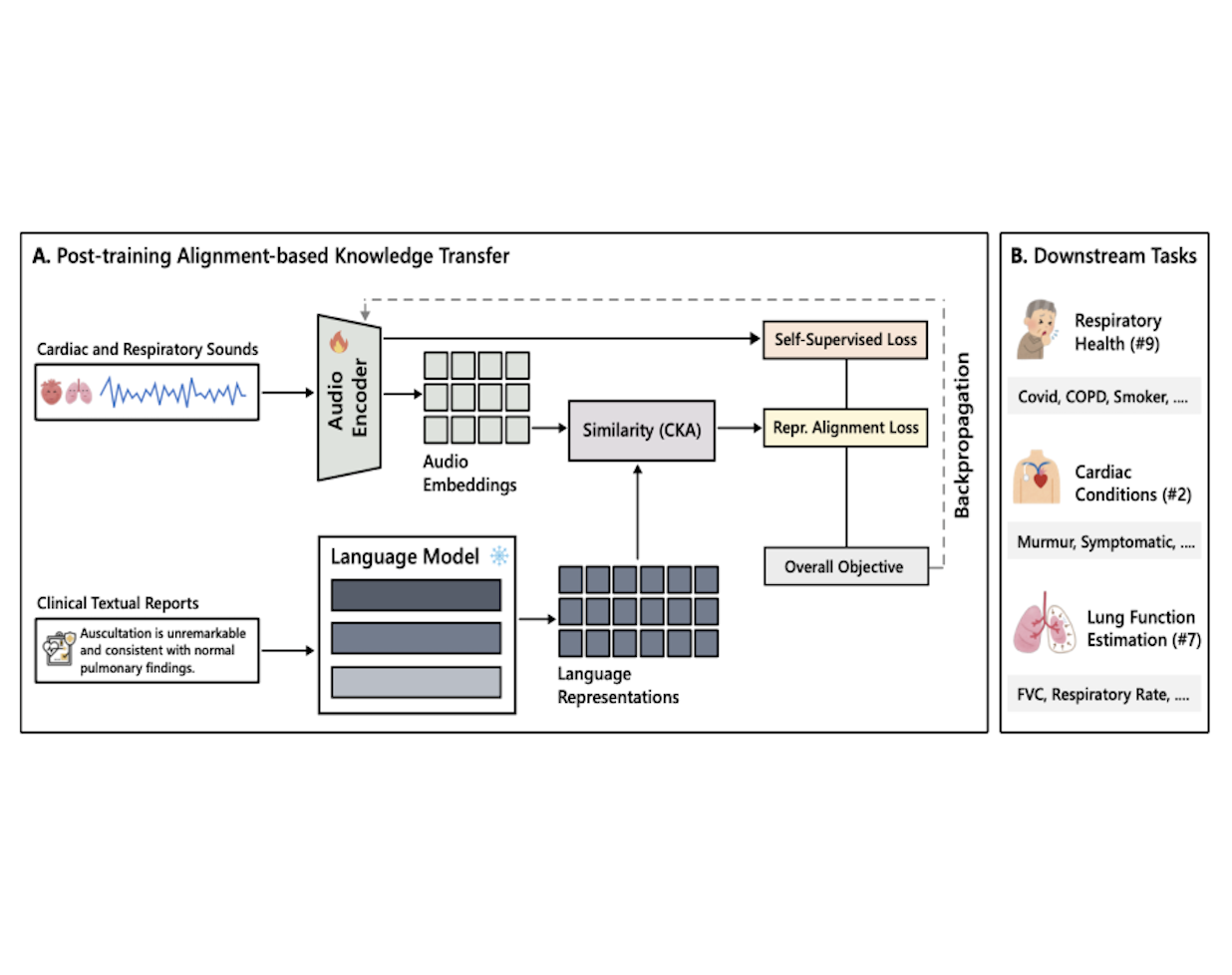

A lightweight post-training audio–language alignment framework that injects clinical semantics into medical audio encoders.

This project accompanies our paper (Wang et al., 2025).

AcuLa (Audio–Clinical Understanding via Language Alignment) is a lightweight post-training framework that makes “acoustically strong but semantically blind” medical audio encoders clinically aware by aligning their representations with those of a frozen medical language model, which acts as a semantic teacher.

To scale audio–text pairing, we use off-the-shelf LLMs to generate clinical reports at scale from structured metadata. We then align audio and text representations with a CKA-based representation alignment loss, while an additional self-supervised objective preserves fine-grained acoustic cues. Across 18 cardio-respiratory tasks, we observe strong gains, including improvements in mean AUROC and large boosts on challenging cough-based diagnosis.
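To make the alignment objective more concrete, here is a minimal sketch of a CKA-style loss in NumPy. It assumes the linear variant of centered kernel alignment computed over a batch of paired embeddings; the exact CKA formulation, batching, and weighting used in the paper are not specified here, and the function names `linear_cka` and `cka_alignment_loss` are hypothetical.

```python
import numpy as np


def linear_cka(x: np.ndarray, y: np.ndarray, eps: float = 1e-12) -> float:
    """Linear CKA between two sets of paired embeddings.

    x: (n_samples, d_x) array, e.g. audio encoder outputs (assumed shape).
    y: (n_samples, d_y) array, e.g. language model outputs (assumed shape).
    The two embedding dimensions may differ; only the batch size must match.
    """
    # Center each feature dimension over the batch.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)

    # ||X^T Y||_F^2 / (||X^T X||_F * ||Y^T Y||_F)
    cross = np.linalg.norm(x.T @ y, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y + eps))


def cka_alignment_loss(audio_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """Illustrative loss: lower when the two representations are more similar."""
    return 1.0 - linear_cka(audio_emb, text_emb)
```

Linear CKA lies in [0, 1] and is invariant to orthogonal transformations and isotropic scaling of either embedding space, so a loss of this form compares the geometry of the two representations without requiring them to share a dimension. In actual training the same computation would be written in an autodiff framework so that gradients reach the audio encoder while the language model stays frozen.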